Fuelling polarisation is Putin’s favourite tactic. It’s time to push back

Funds that have been moved from Russia since Putin's invasion of Ukraine should now be used to preserve Western democracy and strengthen the fight against disinformation, writes Keith Mauppa.

We have witnessed a rare moment of unity in the near-unanimous opposition to Putin's war. In the next test of democracies across the globe, we should aim to invest in the companies that will build social cohesion, not exacerbate social division.

This is how we will, at least in part, combat the war on democracy, of which Putin's war is only the latest manifestation. That wider war began with election hacking and bot farms, disinformation campaigns and the sowing of division. It's the slow, stealthy war that the Kremlin has been waging for years, aided and abetted by the decentralisation of media, the fragility and interdependence of the global internet infrastructure, and the exponential growth of social media platforms. Cybercrime, hacking, and disinformation have arguably now become formalised industries that embrace innovation.

The concept of fake news first received significant attention during the 2016 US Presidential election, and has since become a problem on a global scale, affecting everything from politics to commerce to medicine. It had tragic consequences during the COVID-19 pandemic: in the first three months of 2020, nearly 6,000 people around the world were hospitalised because of coronavirus misinformation, and at least 800 may have died because of it. And that was just the tip of the iceberg.

The World Health Organization calls this an 'infodemic': an overabundance of information, some of it accurate and some of it not, that spreads like wildfire alongside a disease outbreak, discrediting the threat and peddling conspiracy theories, false cures and the like. It breeds anxiety, distrust and fear, and it leads to loss of life. In the United States, almost 80 per cent of people reported seeing fake news on the coronavirus outbreak, 78 per cent believed or were unsure about at least one false statement, and nearly a third believed at least four of eight false statements tested.

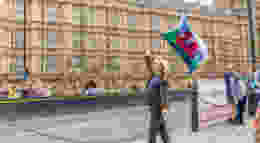

Perhaps the damage wrought by disinformation during the course of the pandemic has begun to hit home. In the US, roughly half of adults (48 per cent) say their government should take steps to restrict false information, even if it means losing some freedom to access and publish content, up from 39 per cent in 2018. Furthermore, the share of adults who say freedom of information should be protected, even if it means some misinformation is published online, has decreased from 58 per cent to 50 per cent. In the UK, the Government's Online Safety Bill continues to make its way through parliament, with one aspect of it threatening businesses with fines of up to 10 per cent of their global turnover if they do not take adequate steps to protect users from fake information.

This growing awareness of the scale and consequences of such widespread disinformation and propaganda is a step in the right direction. But we also have a fundamental problem with business models that rely on outrage over unity, businesses that thrive because of the echo chamber effect.

A social media echo chamber typically forms when a person gets all their news and political discourse from a single platform, for example Facebook, and all their Facebook friends hold the same views. Social media sites foster confirmation bias by design. Regardless of the specific algorithm, they serve the same basic function: to connect groups of like-minded users based on shared content preferences.

The algorithms are designed to ensure a biased, tailored media experience and that experience is very often one that precludes opposing viewpoints and eliminates dissenting opinions. Social media companies rely on adaptive algorithms to assess our interests and flood us with information that will keep us scrolling. They focus on what we 'like', retweet, and share to keep feeding us content that is similar to what we've indicated makes us comfortable.

In short, they ensure we see only the news that fits our preferences. The end result is that people find themselves in a comfortable, self-affirming epistemic bubble. Those bubbles have dangerous real-world consequences that are playing out in increasingly divided and polarised communities, where the lines between fact and fiction are not just blurred but have all but disappeared.

These are problems that threaten the reputation of businesses, endanger public health and safety, undermine governments and even topple democracies. As a society, we need to redouble our efforts with regard to innovation and entrepreneurship that promote a freer, fairer, more prosperous society. There are interesting organisations actively working to address these societal problems using market and non-market-based methods (the latter particularly so when it comes to entities tackling disinformation).

Organisations focused on detecting and reducing disinformation are offering numerous solutions to a broad spectrum of stakeholders, from corporations to governments. In terms of media literacy and fact checking, South African-based non-profit Africa Check fact-checks claims made by public figures, institutions, and the media, and holds entities accountable for their rhetoric. NewsGuard provides a browser extension that indicates the quality of news sources found online, with quality scores based on each source's trust ratings. Weird Enough Productions provides comics and lesson plans to teachers to increase news and social media literacy among pupils.

Businesses interested in protecting their brands online are turning to start-ups that monitor digital assets to identify and quash reputational threats. Yonder helps companies track online conversations about their brands to ensure accuracy and avoid manipulation. BrandShield provides protection solutions that use machine learning to find infringement, counterfeits, and abuse online. Bolster monitors a company's brand online and provides automated take-downs of counterfeit and phishing websites. In April 2020, the company announced a program geared specifically toward helping non-profits that are struggling to cope with phishing and scam attacks, which have increased during the pandemic.

There is also great work being done in the area of malicious content and bot detection. The AI Foundation alerts users to potentially fake media by analysing content using AI-driven tools and crowdsourcing. Backed by Google's Digital News Initiative, Deeptrace is focused on detecting and taking down altered or fake video content. UK-based bot detection company Astroscreen is employing machine learning to spot bots involved in disinformation campaigns.

For years there has been division at the heart of liberal democracies, from Brexit to the rise of populism in Europe, to Trumpism and the riots at the US Capitol. Putin understands that exacerbating these divisions weakens western democracy. Perhaps now is the time to channel all the dry powder and newly divested assets, the money coming out of Russia, into really tackling the disinformation and propaganda that have become the scourge of our democracies.